Question 1. Multiple values for the same word?

I searched IPHOD version 2.0 and noticed that it returns more than one entry, with different values, for the same input word. For example, here are the results when I searched for the word "artichoke":

| Word | NPhon | unsDENS | unsBPAV |
|---|---|---|---|
| artichoke | 7 | 1 | 0.0030323 |
| artichoke | 7 | 2 | 0.003574 |

What's up?!

Answer 1. Multiple pronunciations (you need to show CMU transcriptions)

The IPHOD returns more than one output when the CMU Pronouncing Dictionary contains multiple pronunciations for that word.

In addition to returning the most common pronunciation, I decided to include multiple pronunciations of the same word. By default, the results shown above do not indicate which values belong to which pronunciation. However, if you check the “CMU transcription” box on the search page, you can see the CMU pronunciations for those two entries:

| Word | UnTrn | NPhon | unsDENS | unsBPAV |
|---|---|---|---|---|
| artichoke | AA.R.T.AH.CH.OW.K | 7 | 1 | 0.0030323 |
| artichoke | AA.R.T.IH.CH.OW.K | 7 | 2 | 0.003574 |

You say ar-TIH-choke, and I say ar-TUH-choke...
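If you copy results that include the CMU transcription column, a few lines of code can confirm how the rows line up. This minimal Python sketch assumes the rows have been copied by hand into a list (no IPHOD file format or API is assumed). It checks that NPhon equals the number of dot-separated symbols in UnTrn, groups the pronunciations under one spelling, and locates the phoneme where the two transcriptions differ.

```python
from collections import defaultdict

# Rows copied by hand from the table above:
# (Word, UnTrn, NPhon, unsDENS, unsBPAV)
rows = [
    ("artichoke", "AA.R.T.AH.CH.OW.K", 7, 1, 0.0030323),
    ("artichoke", "AA.R.T.IH.CH.OW.K", 7, 2, 0.003574),
]

def phonemes(untrn):
    """Split a dot-separated CMU transcription into phoneme symbols."""
    return untrn.split(".")

# Group every pronunciation (and its values) under the same spelling.
by_word = defaultdict(list)
for word, untrn, nphon, dens, bpav in rows:
    # NPhon should equal the number of phoneme symbols in UnTrn.
    assert len(phonemes(untrn)) == nphon
    by_word[word].append((untrn, dens, bpav))

for word, prons in by_word.items():
    print(word, "has", len(prons), "pronunciations")
    for untrn, dens, bpav in prons:
        print("  ", untrn, dens, bpav)

# Find the position(s) where the two pronunciations differ.
first, second = (phonemes(p[0]) for p in by_word["artichoke"])
diff = [(i, x, y) for i, (x, y) in enumerate(zip(first, second)) if x != y]
print(diff)  # [(3, 'AH', 'IH')]
```

The only difference is the fourth phoneme (index 3): AH versus IH.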

Question 2. Where did the IPHOD come from, with respect to the CMU Pronouncing Dictionary?

Answer 2. CMUPD version 0.7a (2008).

The IPHOD was last updated in November 2009, and I used the latest version of the CMU Pronouncing Dictionary available at the time (version 0.7a, released in 2008). The IPHOD (2.0) contains only words that have both a CMU transcription and a SUBTLEX word frequency. It therefore contains fewer words than either source, because many words in each database do not appear in the other. Information on how homophones, homographs, and multiple pronunciations were handled is described in the background information here: www.iphod.com/details
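The word list described above is essentially the intersection of two vocabularies. The sketch below illustrates that idea under stated assumptions: the CMU-style lines are illustrative and assumed to follow the dictionary's layout (word, two spaces, phonemes, with alternate pronunciations marked `WORD(1)`), stress digits are omitted, the SUBTLEX frequencies are made-up numbers, and `parse_cmu` is a hypothetical helper. It is not the procedure actually used to build the IPHOD.

```python
import re

# Illustrative lines in an assumed CMU-dictionary layout: WORD, two spaces,
# space-separated phonemes; alternates are marked WORD(1), WORD(2), ...
# Stress digits are omitted to match the UnTrn column.
cmu_lines = [
    "ARTICHOKE  AA R T AH CH OW K",
    "ARTICHOKE(1)  AA R T IH CH OW K",
    "CAT  K AE T",
    "ZEBRA  Z IY B R AH",
]

# Hypothetical SUBTLEX-style frequencies (made-up numbers).
subtlex = {"artichoke": 12, "cat": 3000, "lol": 500}

def parse_cmu(lines):
    """Hypothetical helper: map each lowercase word to its pronunciations."""
    prons = {}
    for line in lines:
        head, trans = line.split("  ", 1)
        word = re.sub(r"\(\d+\)$", "", head).lower()  # strip the (1), (2) marker
        prons.setdefault(word, []).append(".".join(trans.split()))
    return prons

cmu = parse_cmu(cmu_lines)

# Only words present in BOTH sources survive.
shared = sorted(set(cmu) & set(subtlex))
print(shared)            # ['artichoke', 'cat']
print(cmu["artichoke"])  # ['AA.R.T.AH.CH.OW.K', 'AA.R.T.IH.CH.OW.K']
```

Here "zebra" (no frequency) and "lol" (no transcription) drop out, which is why the combined list is smaller than either source.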

Question 3. How can you tell which nonwords have a high or low value?

Answer 3. High and low values are relative to a baseline that should be thoughtfully determined.

Relatively high and low probability nonwords can simply be selected as two groups whose values do not overlap, so that every item in the high group has a higher value than every item in the low group. This is the easiest way to construct such a stimulus list, since it guarantees a distinct range of values for each group. However, it has a limitation: all of the items could still have low probabilities compared with the larger distribution of all English words.
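The no-overlap criterion is easy to check: the largest value in the low group must be smaller than the smallest value in the high group. A minimal sketch with hypothetical values:

```python
def no_overlap(low_group, high_group):
    """True if every value in low_group is below every value in high_group."""
    return max(low_group) < min(high_group)

# Hypothetical biphone probabilities for two candidate nonword lists.
low = [0.0011, 0.0018, 0.0020]
high = [0.0031, 0.0040, 0.0052]

print(no_overlap(low, high))             # True
print(no_overlap(low + [0.0035], high))  # False: 0.0035 exceeds 0.0031
```

Passing this check says nothing about where both groups sit relative to English words in general, which is the limitation noted above.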

A better strategy is probably to compare a sample of interest to the larger population of IPhOD words, since the values are calculated uniformly for all items. Start by downloading the IPhOD words text file and using Excel or another program to calculate the mean and standard deviation (SD) of your value of interest. For the low probability group, you could arbitrarily set an upper limit of Mean – 0.5 SD and select only nonwords with values below that threshold. For the high probability group, set a lower limit of Mean + 0.5 SD and select only nonwords with values above it. If the distribution of values is highly skewed, consider using medians instead of means. It may also be important to restrict the reference distribution to IPhOD words whose CV structure or number of syllables/phonemes is similar to your nonwords. Restricting the reference set can be complicated, however, and it is possible to narrow the reference distribution too much through these restrictions.
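The cutoff rule can also be sketched in Python with the standard `statistics` module instead of Excel. The reference values and nonword values below are hypothetical placeholders; in practice the reference list would come from the IPhOD words text file, whose column layout is not assumed here. `cutoffs` is a hypothetical helper, and `statistics.stdev` computes the sample SD, like Excel's `STDEV`.

```python
import statistics

# Hypothetical reference values: one measure (e.g. unsBPAV) per IPhOD word.
reference = [0.0012, 0.0020, 0.0025, 0.0031, 0.0036, 0.0041, 0.0050, 0.0066]

def cutoffs(values, k=0.5, use_median=False):
    """Hypothetical helper: return (center - k*SD, center + k*SD)."""
    center = statistics.median(values) if use_median else statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD, like Excel's STDEV
    return center - k * sd, center + k * sd

low_max, high_min = cutoffs(reference)

# Hypothetical nonwords and their values on the same measure.
nonwords = {"blick": 0.0049, "bnick": 0.0009, "plim": 0.0033, "zwerp": 0.0016}

low_group = [w for w, v in nonwords.items() if v < low_max]    # below Mean - 0.5 SD
high_group = [w for w, v in nonwords.items() if v > high_min]  # above Mean + 0.5 SD
print(round(low_max, 5), round(high_min, 5))
print(low_group, high_group)  # ['bnick', 'zwerp'] ['blick']
```

"plim" falls between the two cutoffs and is used in neither group. To restrict the reference distribution, filter `reference` first (for example, keep only words with the same number of phonemes as your nonwords) before calling `cutoffs`; pass `use_median=True` for skewed distributions.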

Another important consideration is that these values are inter-correlated. For example, a word made of high-probability phoneme pairs is also likely to come from a dense neighborhood. Likewise, words with CVC structures have a different distribution of densities and phonotactic probabilities than words with CVCC structures. Sometimes you have to consider several variables at once; hopefully the online IPHOD tools are sufficient for that.

Depending on your experience with Excel and/or statistics, it may be a good idea to have someone with statistics training assist in selecting words and nonwords.

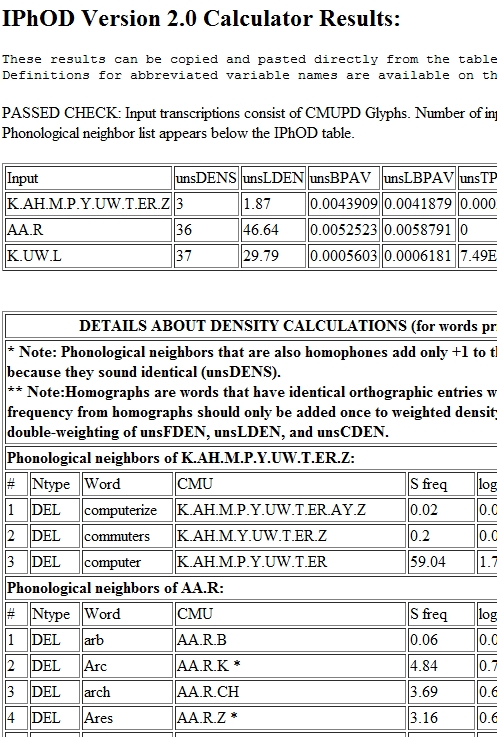

Question 4. Where did these neighbor counts come from?

Answer 4. You can check the count by viewing a list of neighbors.

The IPHOD (2.0) calculator has a "show neighbors" button that lists all of the neighbors included in the counts or weighted sums. So if you want to calculate these values differently but don't want to start from scratch, you can use these lists as the basis for your own.
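If you want to build your own neighbor lists, the sketch below uses a common definition of a phonological neighbor: a word that differs from the target by a single phoneme substitution, addition, or deletion. This definition is an assumption here; see www.iphod.com/details for how the IPHOD itself counts neighbors. The mini-lexicon is hypothetical and uses the dot-separated transcription style of the UnTrn column.

```python
def is_neighbor(a, b):
    """True if phoneme lists a and b differ by exactly one substitution,
    addition, or deletion (a common one-phoneme neighbor definition)."""
    if a == b:
        return False
    la, lb = len(a), len(b)
    if la == lb:
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(la - lb) != 1:
        return False
    short, long_ = (a, b) if la < lb else (b, a)
    # Deleting one phoneme from the longer form must yield the shorter one.
    return any(long_[:i] + long_[i + 1:] == short for i in range(len(long_)))

def neighbors(target, lexicon):
    """Return words in lexicon (word -> dotted transcription) that are
    one-phoneme neighbors of the dotted target transcription."""
    t = target.split(".")
    return [w for w, trans in lexicon.items() if is_neighbor(t, trans.split("."))]

# Hypothetical mini-lexicon.
lexicon = {
    "cat": "K.AE.T", "bat": "B.AE.T", "cap": "K.AE.P", "at": "AE.T",
    "scat": "S.K.AE.T", "dog": "D.AO.G", "cut": "K.AH.T",
}

found = neighbors("K.AE.T", lexicon)
print(found, len(found))  # ['bat', 'cap', 'at', 'scat', 'cut'] 5
```

A weighted sum would replace the count with a sum of some per-neighbor weight (such as word frequency) over the same list.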